Top 5 Reasons to Switch to Power BI Gen2

You should definitely switch your Power BI Premium capacities to Gen2, although if you’re risk-averse you might wait until it reaches GA, because Gen2 is currently in preview. I switched an enterprise client’s P2 node to Gen2 a few months ago, and here are the top benefits we observed:

- Lower CPU utilization

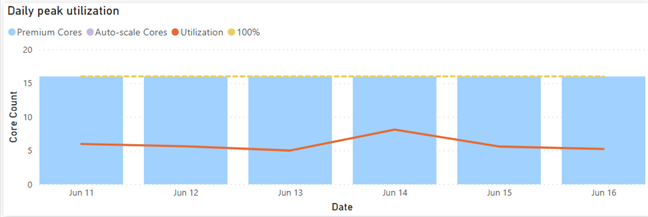

The Gen1 P2 node was under CPU pressure, so the client was considering upgrading to P3. The pressure went away after switching to Gen2, as the graph above shows. That’s because each operation essentially has access to all the cores on the underlying node (which is essentially a P3 node). This can give you a nice performance boost as well. It doesn’t mean that you get more cores for free, though. If Power BI detects that the CPU used by the capacity (across all its datasets, dataflows, etc.) exceeds the CPU that you have purchased, subsequent operations are throttled (delayed).
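The throttling rule can be illustrated with a minimal sketch. This is only a toy model, not how Power BI actually meters a capacity: the `Operation` type, the `should_throttle` function, and the 30-second evaluation window are assumptions made for illustration, and the 16 v-cores stand in for a P2 capacity.

```python
from dataclasses import dataclass


@dataclass
class Operation:
    """A metered activity (refresh, query, dataflow run) on the capacity."""
    name: str
    cpu_seconds: float  # CPU time consumed in the window, summed over all cores


def capacity_budget(purchased_cores: int, window_seconds: int) -> float:
    """CPU-seconds the purchased cores can deliver in one evaluation window."""
    return purchased_cores * window_seconds


def should_throttle(ops, purchased_cores: int, window_seconds: int = 30) -> bool:
    """True when total CPU used in the window exceeds what was purchased.

    The 30-second window is a hypothetical value chosen for this example.
    """
    used = sum(op.cpu_seconds for op in ops)
    return used > capacity_budget(purchased_cores, window_seconds)


# Illustrative P2 capacity: 16 purchased v-cores, budget = 16 * 30 = 480 CPU-seconds.
ops = [Operation("refresh", 300.0), Operation("query", 120.0)]
print(should_throttle(ops, purchased_cores=16))  # 420 <= 480, so not throttled

ops.append(Operation("dataflow", 100.0))
print(should_throttle(ops, purchased_cores=16))  # 520 > 480, so throttled
```

A single operation may burst across more cores than you purchased, but the sum over the window is what gets compared against the purchased budget.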

- More memory

Imported models are memory-resident, so memory is usually the most constraining factor. With Gen2, the capacity maximum memory applies to each resource individually and not collectively across all resources in the capacity. Let’s say you are on a P1 plan, which has a maximum memory of 25 GB. With Gen1, you won’t be able to have two datasets, say 20 GB and 10 GB, loaded at the same time. Gen2, however, applies the 25 GB limit to each dataset, so each resource (dataset, report, dataflow) is boxed within 25 GB. This feat is possible because Gen2 uses a SaaS approach, which means datasets are scattered across multiple cluster nodes instead of being associated with a dedicated capacity. A potential downside, however, could be “noisy neighbors”, because a P3 cluster node may co-host datasets from different customers.

- Fewer “out of memory” refreshes

Related to item 2 (more memory), dataset refreshes now have more room. Assuming P1, a 10 GB dataset is likely to refresh successfully regardless of other datasets loaded in the same capacity. As a rule of thumb, a full refresh requires at least twice the dataset’s memory, so a full refresh of the 20 GB dataset is likely to run out of memory. However, you should be able to get by with less memory if you process specific partitions in large tables or configure them for incremental refresh.

- Less management overhead

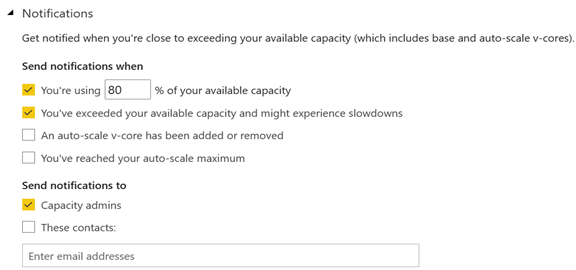

All activities running on the cluster are metered, so the Gen2 utilization app makes it easy to see when the capacity is overutilized. This removes the need to monitor Gen1 capacities proactively for signs of overutilization. Further, capacity admins can subscribe to notifications.

- No additional cost

The best for last. You get all the above without paying more!
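To make the memory arithmetic from the “More memory” and refresh items concrete, here is a small sketch using the P1 numbers from the examples above. The function names and the `refresh_factor` parameter are made up for illustration; the factor of 2 is only the rule of thumb mentioned earlier, not a documented limit.

```python
P1_LIMIT_GB = 25  # P1 maximum memory, as used in the example above


def fits_gen1(dataset_sizes_gb, limit_gb=P1_LIMIT_GB) -> bool:
    """Gen1: the limit applies collectively to everything loaded in the capacity."""
    return sum(dataset_sizes_gb) <= limit_gb


def fits_gen2(dataset_sizes_gb, limit_gb=P1_LIMIT_GB) -> bool:
    """Gen2: the limit applies to each dataset individually."""
    return all(size <= limit_gb for size in dataset_sizes_gb)


def full_refresh_likely_fits(dataset_gb, limit_gb=P1_LIMIT_GB, refresh_factor=2.0) -> bool:
    """Rule of thumb: a full refresh needs about twice the dataset's memory."""
    return dataset_gb * refresh_factor <= limit_gb


datasets = [20, 10]
print(fits_gen1(datasets))           # False: 20 + 10 = 30 GB exceeds 25 GB
print(fits_gen2(datasets))           # True: each dataset is under 25 GB
print(full_refresh_likely_fits(10))  # True: 2 * 10 = 20 GB fits
print(full_refresh_likely_fits(20))  # False: 2 * 20 = 40 GB does not fit
```

Processing individual partitions or using incremental refresh effectively lowers the amount of data in flight, which is why the 20 GB dataset can still be refreshed in pieces.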

What I’d like to see improved in the future:

- Customized capacity limits, such as when more memory but fewer cores are needed.

- More granular auto-scale. The current auto-scaling mechanism keeps the “ad hoc” cores for 24 hours. Ideally, I’d like to see the same auto-scaling mechanism as Azure SQL Database Serverless, where the system auto-scales within minutes and can pause when the database is not in use. Of course, this should also apply to removing provisioned cores, such as a P1 plan scaling down to fewer than 8 cores.

- Better utilization monitoring app. The current app leaves a lot of room for improvement. For example, it doesn’t currently report memory utilization at all. The app should report memory utilization per dataset and per refresh so that you can answer the question “why did my refresh run out of memory?”