Data Integration Options with Cloud Providers

It’s hard to envision a data analytics solution that doesn’t have to ingest data from some cloud vendor. I previously ranted here about cloud prohibitors – cloud providers that negate the benefits of moving to the cloud by making you jump through all sorts of hoops to get to your data. This blog summarizes a few data integration options with cloud vendors from best to worst.

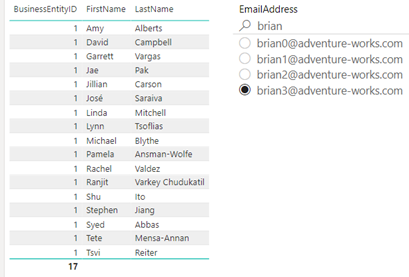

- Direct access to the database – This is by far your best option. If the provider allows you to access the data by directly connecting to its native storage, you should take it even if it involves higher fees, such as upgrading to a “premium” tier (a travesty considering that you didn’t have to pay higher fees with their on-prem installations). There are no insurmountable engineering barriers for the vendor to provide direct access to the data in its native storage, but very few cloud vendors do it, citing “issues” such as security, impact on operational processes, etc. Here is a free piece of advice to cloud vendors: provide direct access to the underlying relational database and use this as a big differentiator against the competition. Security and performance should be on you and not the customer.

- Data staging – If direct access is not an option, the second best would be for the vendor to stage the data out, ideally to a relational database you provision, such as by using the Dynamics Data Export add-in to export data from Dynamics Online (now regretfully deprecated by Microsoft in favor of staging to a data lake). Unfortunately, it looks like the norm nowadays is to export to a data lake as flat files, so the extraction path becomes relational database -> flat files -> relational database. I hope that makes sense to you because it doesn’t to me. Things to watch out for here are where the data lake is located (Azure, AWS, others) and what integration options are provided. For example, one major ERP vendor supports only S3 as a data lake and provides a JDBC driver to connect to it (JDBC is a Java-based connector that can’t be used by any Microsoft-based integration tool).

- Data push as flat files – Smaller and more flexible cloud providers might be willing to push the data to storage that you provide. In such cases, you should strongly consider an Azure data lake instead of FTP. For example, one cloud provider I integrated with used Ruby to call the Azure API to post data extracts to the client’s data lake storage that we set up.

- REST APIs – Most cloud integration scenarios will fall in this bucket because the vendor wants to ensure “secure” and “controlled” access to data. The reality is that many of these REST APIs are horrible in both implementation and throughput. For example, the REST APIs of another ERP vendor couldn’t handle a batch export of 500,000 rows (a dataset that can fit into an Excel spreadsheet and be emailed around, mind you). Their server would time out because of the “massive” data, and the client was asked to use callback APIs to chunk the export, which of course wouldn’t work either. And complexity only grows from here, as some implementations require result paging, error handling, etc., forcing you to write custom code.

- Manual export – If the above options are not possible, your last resort might be to use the vendor app and export the data manually. So much for automating the data integration…
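
To give a feel for the custom code the REST API option tends to force on you, here is a minimal Python sketch of paged extraction with retries. It is not tied to any real vendor: `extract_paged`, the `fetch_page(offset, limit)` callable, and the offset/limit paging scheme are all assumptions made for illustration, and a real API may page by cursor or token instead.

```python
import time
from typing import Any, Callable, Dict, Iterator, List

Row = Dict[str, Any]


def extract_paged(
    fetch_page: Callable[[int, int], List[Row]],
    page_size: int = 1000,
    max_retries: int = 3,
    backoff_seconds: float = 1.0,
) -> Iterator[Row]:
    """Yield every row from a paged endpoint, retrying failed page requests.

    fetch_page is a hypothetical callable that wraps the vendor's API call
    and returns the rows for the given offset and limit.
    """
    offset = 0
    while True:
        for attempt in range(1, max_retries + 1):
            try:
                page = fetch_page(offset, page_size)
                break
            except (TimeoutError, ConnectionError):
                if attempt == max_retries:
                    raise  # give up after the last allowed attempt
                time.sleep(backoff_seconds * attempt)  # linear backoff
        if not page:
            return  # an empty page means there is nothing left
        yield from page
        if len(page) < page_size:
            return  # a short page is treated as the last page
        offset += len(page)
```

In practice `fetch_page` would issue the HTTP request and parse the response, and you would still need to decide where to land the rows and how to resume after a failed run.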

In summary, when evaluating a cloud vendor, don’t forget to ask how you can access your data. Extracting data by connecting to its native storage (typically a relational database) is the best option for integrating with cloud data sources: the data is in its native format, you can fold operations such as filtering and sorting, you can extract data incrementally, and you can even build SQL views if the vendor allows it. Unfortunately, the norm nowadays is to force you to call the vendor’s API, so the extraction effort in your ETL is on you and not the vendor.
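
By contrast, incremental extraction over a direct database connection can be as simple as a filtered query. The sketch below uses an in-memory SQLite database as a stand-in for the vendor's database. The `invoices` table, its `modified_at` column, and the `extract_incremental` helper are hypothetical, and it assumes the source keeps a reliable modification timestamp stored as ISO 8601 text.

```python
import sqlite3


def extract_incremental(conn, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark."""
    cur = conn.execute(
        "SELECT id, amount, modified_at FROM invoices "
        "WHERE modified_at > ? ORDER BY modified_at",
        (last_watermark,),
    )
    rows = cur.fetchall()
    # Keep the old watermark when nothing has changed since the last run.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


# In-memory SQLite stands in for the vendor database (an assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE invoices (id INTEGER PRIMARY KEY, amount REAL, modified_at TEXT)"
)
conn.executemany(
    "INSERT INTO invoices VALUES (?, ?, ?)",
    [
        (1, 100.0, "2024-01-01T00:00:00"),
        (2, 250.0, "2024-01-02T00:00:00"),
        (3, 75.5, "2024-01-03T00:00:00"),
    ],
)

# Only rows changed after the stored watermark are pulled.
rows, watermark = extract_incremental(conn, "2024-01-01T00:00:00")
```

The filter and the sort run on the source database, so only the changed rows travel over the wire. That is the kind of folding an API-only integration rarely gives you.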