Atlanta MS BI and Power BI Group Meeting on February 6th (Lakehouse in an Hour)

Please join us for the next meeting on Monday, February 6th, at 6:30 PM ET. Patrick LeBlanc (Principal Program Manager at Microsoft and Guy in a Cube) will show you how to implement a lakehouse with Delta Lake, Azure Data Factory, and Synapse. For more details and to sign up, visit our group page.

We are resuming in-person meetings at the Microsoft office in Alpharetta. We strongly encourage you to attend the event in person for the best experience. Please note that guests entering Microsoft buildings in the U.S. must provide proof of vaccination or self-attest with HealthCheck (HTTPS://AKA.MS/HEALTHCHECK). Alternatively, you can join our meetings online via MS Teams. When possible, we will record the meetings and make recordings available at HTTPS://BIT.LY/ATLANTABIRECS. Please RSVP only if you are coming to our in-person meeting.

Presentation: Lakehouse in an Hour

Date: February 6th

Time: 6:30 – 8:30 PM ET

Place: Onsite and online

ONSITE

Microsoft Office (Alpharetta)

8000 Avalon Boulevard Suite 900

Alpharetta, GA 30009

ONLINE

Click here to join the meeting

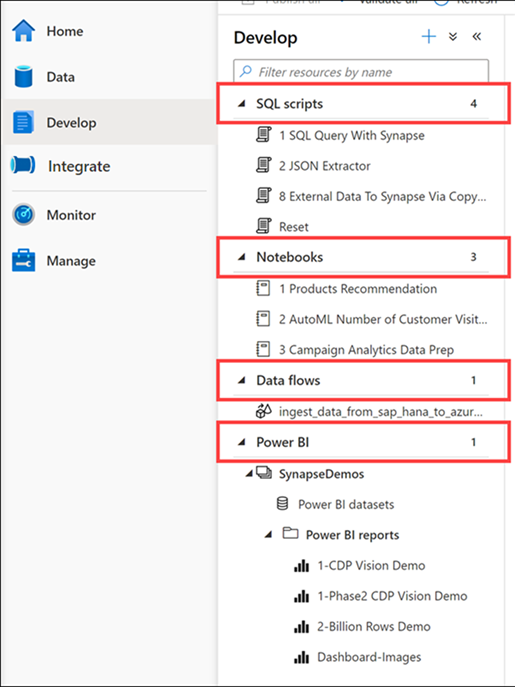

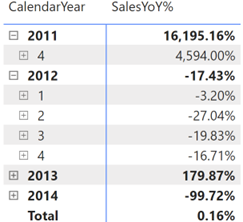

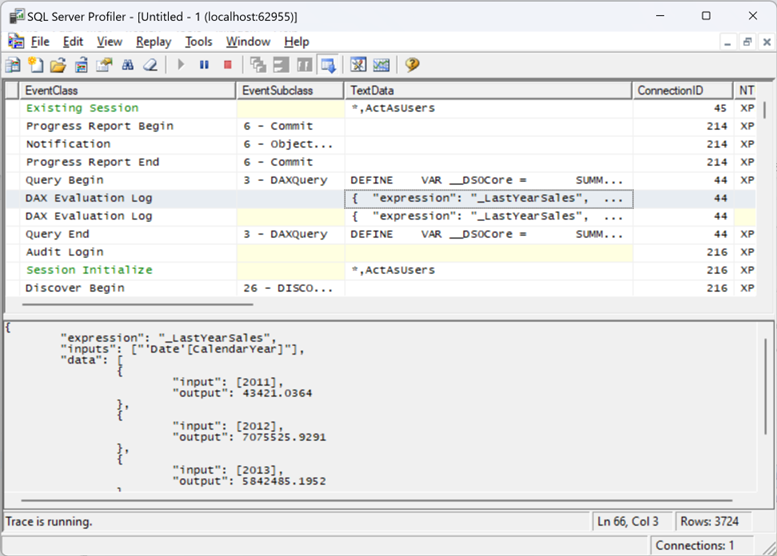

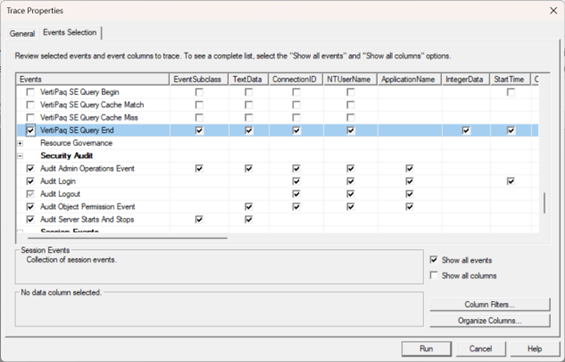

Overview: Join us for an action-packed, demo-fueled session where we actually build a lakehouse from source to report in less than an hour. We will walk you through getting your data from your source system, building out your data lake using Delta, transforming your data with Data Flows, serving it with Serverless SQL Pool, and finally connecting it to Power BI! After this session you will be able to start using all of these technologies and make your analytical environment a success!

Speaker: Patrick LeBlanc is currently a Principal Program Manager at Microsoft and a contributing partner to Guy in a Cube. Along with his 15+ years of experience in IT, he holds a Master of Science degree from Louisiana State University. He is the author and co-author of five SQL Server books. Prior to joining Microsoft, he received the Microsoft MVP award for his contributions to the community. Patrick is a regular speaker at many SQL Server conferences and community events.

Sponsor: The Community (thank you for your donations!)

Prototypes with Pizza: Power BI latest news