Power BI Large Datasets: The Good, the Bad, and the Ugly

At Ignite 2019, Microsoft announced the public preview of large datasets in Power BI Premium. This is a significant milestone because datasets can now grow up to the capacity’s maximum memory (previously, the maximum size was 10 GB with the P3 plan), which opens the possibility of deploying organizational semantic models to Power BI. I consider this feature mostly suitable for organizational BI, as I don’t imagine business users dealing with such large data volumes. I tested large datasets during their private preview, and I’d like to share some notes.

The Good

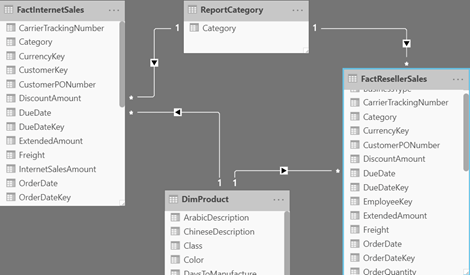

Today, BI developers can deploy organizational semantic models to three Analysis Services Tabular SKUs: SQL Server Analysis Services, Azure Analysis Services, and now Power BI Premium. SQL Server Analysis Services is the Microsoft on-prem offering and it aligns with the SQL Server release schedule. Traditionally, Azure Analysis Services (AAS) has been the choice for cloud (PaaS) deployments. However, caught in the middle between SQL Server and Power BI, the future of AAS is now uncertain, given that Microsoft wants to make Power BI your one-stop destination for all your BI needs. From a strategic perspective, it makes sense to consider Power BI Premium for deploying organizational semantic models because of the following main benefits:

- Always on the latest – Both AAS and SQL Server lag in features compared to Power BI Premium. For example, composite models and aggregations are not available in SQL Server 2019 or Azure Analysis Services. By deploying to Power BI, which is also powered by Analysis Services Tabular, your models will always be on the latest and greatest.

- Feature parity – As I explain in my “Power BI Feature Discrepancies for Data Acquisition” blog, some Power BI features, such as Quick Insights, Explain Increase/Decrease, and Power Query, are not supported with live connections to Analysis Services. By hosting your models in Power BI Premium, these features are now supported because Power BI owns the data, just as when you import data in Power BI Desktop and then publish the model.

The Bad

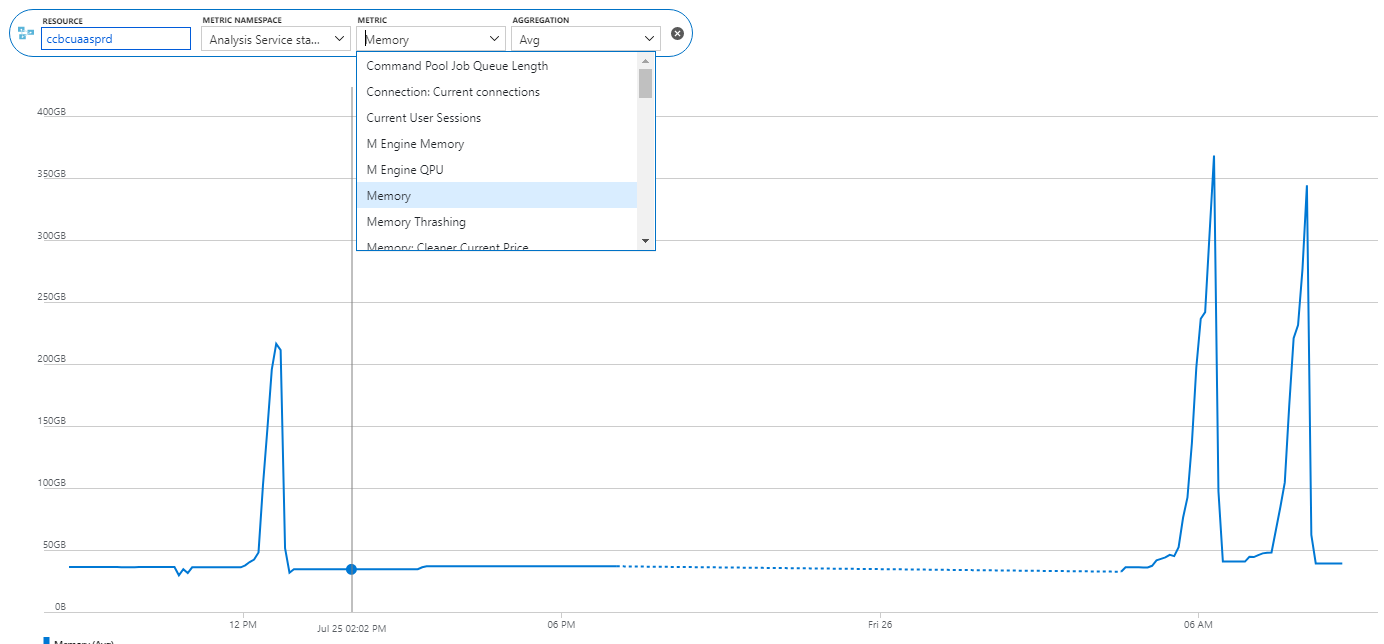

As a Power BI Premium feature, large datasets will require planning and purchasing a premium capacity. Given that you need at least twice the memory to fully process a model (less memory should be required if you process incrementally), you must size accordingly. For example, a 15 GB model would require at least 30 GB of memory to fully process, bringing you into P2 plan territory. Memory is the most important constraint for Tabular. Unlike SQL Server, which doesn’t license by memory (you can add as much memory as you like without paying a dime more in licensing fees), Power BI Premium plans cap the capacity memory. So, you’ll end up having a dedicated P1 or P2 plan for hosting your organizational semantic model, and another P plan (or plans) for self-service BI.
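To make the sizing rule concrete, here is a minimal Python sketch of the arithmetic above. It assumes the "twice the model size" rule of thumb for full processing and per-plan memory caps of 25 GB (P1), 50 GB (P2), and 100 GB (P3). Both the caps and the function names are my assumptions for illustration, so check them against the current Power BI Premium documentation before relying on them.

```python
# Rough capacity sizing for a Tabular model hosted in Power BI Premium.
# ASSUMPTION: memory caps per plan in GB; verify against current documentation.
PLAN_MEMORY_GB = {"P1": 25, "P2": 50, "P3": 100}


def full_processing_memory_gb(model_size_gb, factor=2.0):
    """Estimate the memory needed to fully process a model.

    The default factor of 2 reflects the rule of thumb that a full
    process needs at least twice the model size. Incremental
    processing should need less, so pass a smaller factor for that.
    """
    return model_size_gb * factor


def smallest_plan_for(model_size_gb, factor=2.0):
    """Return the smallest plan whose memory covers the estimate, or None."""
    required = full_processing_memory_gb(model_size_gb, factor)
    # Walk the plans from smallest to largest memory cap.
    for plan, memory_gb in sorted(PLAN_MEMORY_GB.items(), key=lambda kv: kv[1]):
        if memory_gb >= required:
            return plan
    return None  # The model does not fit in any listed plan.


if __name__ == "__main__":
    for size_gb in (10, 15, 40, 60):
        print(size_gb, "GB model ->", smallest_plan_for(size_gb))
```

Under these assumptions, the 15 GB model from the example needs 30 GB and lands on P2, while a 60 GB model would not fit any of the listed plans if fully processed.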

I’d like to see elastic scaling come to Power BI Premium at some point in the future. Instead of boxing me into a specific plan, which will more than likely be underutilized, I’d like to see Power BI Premium scale up and down on demand. This should help lower the cost.

The Ugly

The lack of DevOps in Power BI Premium will put another hole in your budget. Unlike SQL Server, where you pay only for production use, no special DEV or QA environments and licensing options exist in Power BI Premium. So, you must plan for additional premium capacities, such as three separate capacities for PROD, DEV, and QA (I know of organizations that need many more DevOps environments). At this price point, even large organizations will reconsider the licensing cost of hosting their models in Power BI. How about leaving QA and DEV on-prem? This would require coding for the least common denominator, which defeats the benefit of deploying to Power BI Premium. You can get innovative and attempt to reduce licensing cost by purchasing Azure A plans for DEV and QA and stopping the A capacities when they are not in use, but I wonder how many organizations will be willing to go through the pain of doing this. The Cloud should make things easier, right?

Large datasets will open another deployment option for hosting organizational semantic models. This might be an attractive option for some organizations and ISVs. Others will find that staying on-prem could lower their licensing cost. Once the Power BI Premium XMLA endpoint supports write operations (promised for December 2019 in the roadmap), BI developers can use a tool of their choice, such as Tabular Editor or Visual Studio, to develop and deploy semantic models that are always on the latest features and that unify BI on a single platform: Power BI. (I personally find Power BI Desktop unsuitable for organizational model development, mainly because of its slow performance and its lack of source control and extensibility.)

