-

SSRS Query Performance Issues with Parameters

March 18, 2011 / No Comments »

I recall seeing "fast queries in SSMS but slow in SSRS" issues reported a few times in the public discussion list but I've always dismissed them as unrelated to SSRS. Alas, I happened to see one today when a report would take a very long time (7 min) to execute. As usual, the first stop was to take a look at the SSRS Execution Log which showed that all the time was spent in data retrieval. However, executing the report query in SSMS would take a few seconds. In this case, the report had a complex free-text T-SQL SELECT statement that referenced a single report parameter several times in the query. The workaround we found at the end was to declare a variable at the beginning of the query that referenced the parameter once, as follows: DECLARE @invoice_number as int SET @invoice_number = @inv_parameter Then, we referenced the variable...

-

Presenting at TechEd North America 2011

March 16, 2011 / No Comments »

I'll be presenting two breakout sessions at TechEd North America 2011 which will be held this year in my home city of Atlanta. The tentative titles of the sessions are: DBI205: Reporting Services Are We There Yet? – I'll discuss top requested SSRS features, give solutions and workarounds, share more tips with lots of demos, and present reasons to upgrade to SSRS 2008 or R2. DBI330: Can Your BI Solution Scale? - Present practical load testing methodology, show how to load test SSRS and SSAS, analyze results and performance bottlenecks. The sessions are scheduled back to back on Monday, May 16th, from 3 PM to 6 PM. Please join me if you plan to attend TechEd. See ya'll in Hotlanta!

-

Applied PowerPivot Online Training Class

March 6, 2011 / No Comments »

Self-service BI is on the rise. Join me this month for the PowerPivot online class. Learn how self-service BI can help your users build their own BI solutions with minimum involvement from IT. No travel, no hotel expenses, just 100% content delivered right to your desktop! Our intensive online classes teach you the skills to master Microsoft BI to its fullest. Use the opportunity to ask questions and learn best practices. Applied Business Intelligence Training in March NEW! Applied PowerPivot Online Training Class - Only $599 Date: March 29 – March 30, 2010 Time: Noon – 4:00 pm EDT; 9 am – 1:00 pm PDT 8 hours for only $599 You can request custom dates for groups of five or more students. For more information or to register click here!

-

Dundas Analytical Dashboard and Analysis Services

March 3, 2011 / No Comments »

In my Dundas Dashboard and MS PerformancePoint Comparison article, I introduced you to Dundas Dashboard and explained how you can use it to rapidly assemble compelling performance dashboards. With Dundas Dashboard, you can also build analytical dashboards to let users create interactive, web-based reports from OLAP databases, such as Microsoft Analysis Services, as I will show you in this article.

-

Drillthroughs Going Wild

March 1, 2011 / No Comments »

Got bit by an unfortunate bug which has been around for four years since it got reported on connect.microsoft.com with Analysis Services OLAP drillthrough. It caused me almost a day to figure out. Drillthrough actions (both default and explicit drillthrough) won't return any rows on some cells or would return repeating rows for other cells. As I posted on connect the bug springs to action when: The measure group has IgnoreUnrelatedDimensions=False. Bryan Smith provides a great coverage of IgnoreUnrelatedDimensions in this blog. A dimension attribute has IsAggregatable set to False. Setting the dimension default member either using the DefaultMember attribute property or via script doesn't make a difference. In my case, I had a Time Calculations dimension which was a free floater and it had IsAggregatable=False. Excluding the unrelated dimensions from the drillthrough action doesn't make a difference either. The only workaround I've found is to set IgnoreUnrelatedDimensions back to...

-

Unaggregatable M2M Dimensions

January 31, 2011 / No Comments »

I was called upon to troubleshoot an issue with creating an aggregation design for an existing cube. The Design Aggregation Wizard would refuse to design aggregations with the following cryptic message: Errors in the aggregation designer. The 'Perspective' dimension has no attribute for the aggregation design. The server cannot design aggregations when this attribute is missing. Upon a closer look, it turned out the Perspective dimension joins the measure group via a many-to-many relationship. More importantly, its IsAggregatable property was set to False to suppress the All member because it would be meaningless to aggregate data across the members in this particular dimension. Instead, the cube script would set the dimension default member with an ALTER statement. How do we solve this horrible problem? Here is my solution: Set IsAggregatable property of the dimension attribute back to True. Add a scope assignment to the cube script to nuke the All...

-

Estimating Data Compression

January 26, 2011 / No Comments »

Now that the age of in-memory databases has dawned on us, I've been experimenting with different aspects of VertiPaq and I find its technology fascinating. It's safe to say that VertiPaq will propel Microsoft Business Intelligence for years to come with all of its three flavors: PowerPivot (self-service BI), Business Intelligence Semantic Model (corporate BI), and data warehousing (column-based stored indexes). Unlike Analysis Services MOLAP, which compresses data by rows, VertiPaq compresses data by columns. Column-based compression fits business intelligence like a glove because data is typically analyzed by columns. And, the lower the data cardinality (that is the more repeating values a column has), the higher its compression rate will be. Since most columns used for analytics fit this category, you could expect 10-15 times data compression. Consequently, a half terabyte database could potentially fit into 80 GB of memory. Notice that I account for more memory because when...

-

PowerPivot Stories from the Trenches

January 15, 2011 / No Comments »

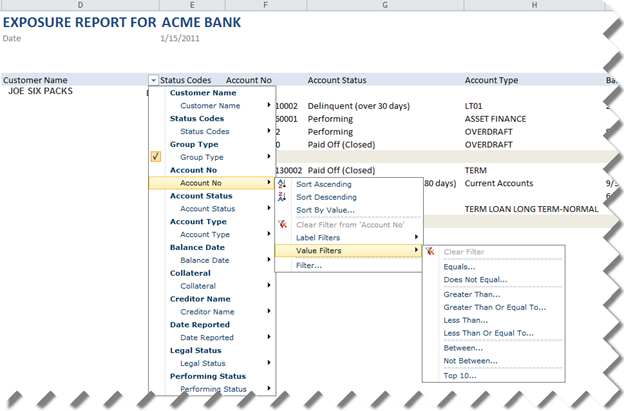

Now that a snow blizzard has paralyzed Atlanta for a week, what a better way to start the new year than sharing a PowerPivot success story. A bank institution has approached Prologika to help them implement a solution to report the customer's credit history so the bank can evaluate the risk for granting the customer a loan. Their high-level initial requirements call for: Flexible searching and filtering to the let the bank user find a particular customer or search for the customer accounts both owned by the bank or externally reported from other banks. Flexible report layout that will let the bank user change the report layout by adding or removing fields. Ability to download the report locally to allow the bank user to run the report when there is no connectivity. Refreshing the credit history on a schedule. Initially, the bank was gravitating toward a home-grown solution that would...

-

PowerPivot Time Calculations

January 15, 2011 / No Comments »

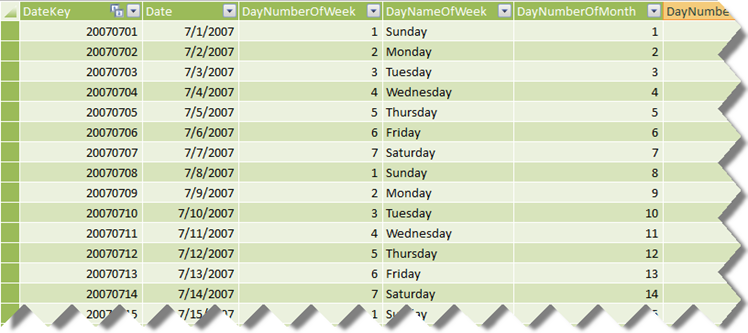

A recommended practice for implementing time calculations in PowerPivot is to have a Data table with a datetime column. Kasper de Jonge explains in more details in this blog. This approach will probably save effort when importing data from normalized schemas and won't require specifying additional arguments to the PowerPivot time functions. However, it will undoubtedly present an issue when importing data from a star schema. A dimensional modeling best practice is to have an integer key for a Data dimension table in the format YYYYMMDD and integer foreign keys in the fact tables. Luckily, you don't have to normalize data back to datetime when building a PowerPivot model on top of star schemas after the issue with the All filter Kasper reported a while back got fixed in PowerPivot RTM. Let's consider the AdventureWorksDW schema. Its DimDate table has an integer key (DateKey). Let's say you import this table...

-

Report Actions for Report Server in SharePoint Mode

December 12, 2010 / No Comments »

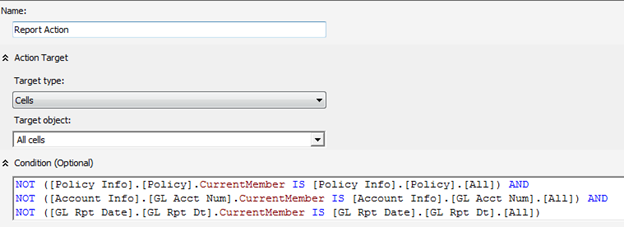

Report actions are an Analysis Services extensibility mechanism that lets end users run a Reporting Services report as they browse the cube. A customer reported that they have trouble setting up a Reporting Services action for a report server configured in a SharePoint integrated mode – a scenario which appears that wasn't tested properly by Microsoft. First, the customer wanted to pass multiple parameters to the report. This was achieved by setting up the report action Target Type to Cells and Target Object to All Cells. This setup allows the user to right-click any cell on the cube to initiate the action. What's more important is that an action-capable browser, such an Excel, will be able to collect the coordinates of all dimensions used in the browser, such as those added to the filter area, so you can pass them as parameters to the report. A condition is further specified...

We offer onsite and online Business Intelligence classes! Contact us about in-person training for groups of five or more students.

We offer onsite and online Business Intelligence classes! Contact us about in-person training for groups of five or more students.